Bird’s eye view#

Background#

Data lineage tracks data’s journey, detailing its origins, transformations, and interactions to trace biological insights, verify experimental outcomes, meet regulatory standards, and increase the robustness of research. While tracking data lineage is easier when it is governed by deterministic pipelines, it becomes hard when it’s governed by interactive human-driven analyses.

Here, we’ll backtrace file transformations through notebooks, pipelines & app uploads in a research project based on Schmidt22, which conducted genome-wide CRISPR activation and interference screens in primary human T cells to identify gene networks controlling IL-2 and IFN-γ production.

Setup#

We need an instance:

!lamin init --storage ./mydata

💡 creating schemas: core==0.46.1

✅ saved: User(id='DzTjkKse', handle='testuser1', email='testuser1@lamin.ai', name='Test User1', updated_at=2023-08-28 13:54:04)

✅ saved: Storage(id='yTQlccVf', root='/home/runner/work/lamin-usecases/lamin-usecases/docs/mydata', type='local', updated_at=2023-08-28 13:54:04, created_by_id='DzTjkKse')

✅ loaded instance: testuser1/mydata

💡 did not register local instance on hub (if you want, call `lamin register`)

Import lamindb:

import lamindb as ln

✅ loaded instance: testuser1/mydata (lamindb 0.51.0)

We simulate the raw data processing of Schmidt22 with toy data in a real-world setting with multiple collaborators (here testuser1 and testuser2):

assert ln.setup.settings.user.handle == "testuser1"

bfx_run_output = ln.dev.datasets.dir_scrnaseq_cellranger(

"perturbseq", basedir=ln.settings.storage, output_only=False

)

ln.track(ln.Transform(name="Chromium 10x upload", type="pipeline"))

ln.File(bfx_run_output.parent / "fastq/perturbseq_R1_001.fastq.gz").save()

ln.File(bfx_run_output.parent / "fastq/perturbseq_R2_001.fastq.gz").save()

✅ saved: Transform(id='2Gwjq1pTD7eMBP', name='Chromium 10x upload', type='pipeline', updated_at=2023-08-28 13:54:05, created_by_id='DzTjkKse')

✅ saved: Run(id='2JNx4mvl5KxkA1uo4bgb', run_at=2023-08-28 13:54:05, transform_id='2Gwjq1pTD7eMBP', created_by_id='DzTjkKse')

💡 file in storage 'mydata' with key 'fastq/perturbseq_R1_001.fastq.gz'

💡 file in storage 'mydata' with key 'fastq/perturbseq_R2_001.fastq.gz'

Track a bioinformatics pipeline#

When working with a pipeline, we’ll register it before running it.

This only happens once and could be done by anyone on your team.

ln.setup.login("testuser2")

✅ logged in with email testuser2@lamin.ai and id bKeW4T6E

❗ record with similar name exist! did you mean to load it?

| name | id | __ratio__ |
|---|---|---|
| Test User1 | DzTjkKse | 90.0 |

✅ saved: User(id='bKeW4T6E', handle='testuser2', email='testuser2@lamin.ai', name='Test User2', updated_at=2023-08-28 13:54:06)

transform = ln.Transform(name="Cell Ranger", version="7.2.0", type="pipeline")

ln.User.filter().df()

| id | handle | email | name | updated_at |
|---|---|---|---|---|
| DzTjkKse | testuser1 | testuser1@lamin.ai | Test User1 | 2023-08-28 13:54:04 |
| bKeW4T6E | testuser2 | testuser2@lamin.ai | Test User2 | 2023-08-28 13:54:06 |

transform

Transform(id='gWd973slbGqaIt', name='Cell Ranger', version='7.2.0', type='pipeline', created_by_id='bKeW4T6E')

ln.track(transform)

✅ saved: Transform(id='gWd973slbGqaIt', name='Cell Ranger', version='7.2.0', type='pipeline', updated_at=2023-08-28 13:54:06, created_by_id='bKeW4T6E')

✅ saved: Run(id='WnefvAB77JsFt9MPhySh', run_at=2023-08-28 13:54:06, transform_id='gWd973slbGqaIt', created_by_id='bKeW4T6E')

Now, let’s stage a few files from an instrument upload:

files = ln.File.filter(key__startswith="fastq/perturbseq").all()

filepaths = [file.stage() for file in files]

💡 adding file Gf4QsqxPkIiCGTTrrc59 as input for run WnefvAB77JsFt9MPhySh, adding parent transform 2Gwjq1pTD7eMBP

💡 adding file CP0gGchaMIwFIegysGRX as input for run WnefvAB77JsFt9MPhySh, adding parent transform 2Gwjq1pTD7eMBP

Assume we processed them and obtained 3 output files in a folder 'filtered_feature_bc_matrix':

output_files = ln.File.from_dir("./mydata/perturbseq/filtered_feature_bc_matrix/")

ln.save(output_files)

✅ created 3 files from directory using storage /home/runner/work/lamin-usecases/lamin-usecases/docs/mydata and key = perturbseq/filtered_feature_bc_matrix/

Let’s look at the data lineage at this stage:

output_files[0].view_lineage()

And let’s keep running the Cell Ranger pipeline in the background.

transform = ln.Transform(

name="Preprocess Cell Ranger outputs", version="2.0", type="pipeline"

)

ln.track(transform)

[f.stage() for f in output_files]

filepath = ln.dev.datasets.schmidt22_perturbseq(basedir=ln.settings.storage)

file = ln.File(filepath, description="perturbseq counts")

file.save()

✅ saved: Transform(id='AAoRtj4w0X3egT', name='Preprocess Cell Ranger outputs', version='2.0', type='pipeline', updated_at=2023-08-28 13:54:06, created_by_id='bKeW4T6E')

✅ saved: Run(id='AQtkG4OkUIrLPYqR4Qn5', run_at=2023-08-28 13:54:06, transform_id='AAoRtj4w0X3egT', created_by_id='bKeW4T6E')

💡 adding file lP28OYTqv8Prf8s1BPln as input for run AQtkG4OkUIrLPYqR4Qn5, adding parent transform gWd973slbGqaIt

💡 adding file NqQ90aex3CQvdYDoPmsn as input for run AQtkG4OkUIrLPYqR4Qn5, adding parent transform gWd973slbGqaIt

💡 adding file jgWqeGfFwVAamkN82yfG as input for run AQtkG4OkUIrLPYqR4Qn5, adding parent transform gWd973slbGqaIt

💡 file in storage 'mydata' with key 'schmidt22_perturbseq.h5ad'

💡 data is AnnDataLike, consider using .from_anndata() to link var_names and obs.columns as features

Track app upload & analytics#

The hidden cell below simulates additional analytic steps, including uploading phenotypic screen data, scRNA-seq analysis, and analyses of the integrated datasets.

# app upload

ln.setup.login("testuser1")

transform = ln.Transform(name="Upload GWS CRISPRa result", type="app")

ln.track(transform)

filepath = ln.dev.datasets.schmidt22_crispra_gws_IFNG(ln.settings.storage)

file = ln.File(filepath, description="Raw data of schmidt22 crispra GWS")

file.save()

# upload and analyze the GWS data

ln.setup.login("testuser2")

transform = ln.Transform(name="GWS CRIPSRa analysis", type="notebook")

ln.track(transform)

file_wgs = ln.File.filter(key="schmidt22-crispra-gws-IFNG.csv").one()

df = file_wgs.load().set_index("id")

hits_df = df[df["pos|fdr"] < 0.01].copy()

file_hits = ln.File(hits_df, description="hits from schmidt22 crispra GWS")

file_hits.save()

✅ logged in with email testuser1@lamin.ai and id DzTjkKse

✅ saved: Transform(id='lapgANZ3brHG4w', name='Upload GWS CRISPRa result', type='app', updated_at=2023-08-28 13:54:07, created_by_id='DzTjkKse')

✅ saved: Run(id='f0dBYuVnm9xJIrHixYBX', run_at=2023-08-28 13:54:07, transform_id='lapgANZ3brHG4w', created_by_id='DzTjkKse')

💡 file in storage 'mydata' with key 'schmidt22-crispra-gws-IFNG.csv'

✅ logged in with email testuser2@lamin.ai and id bKeW4T6E

✅ saved: Transform(id='0qgOkJBvG7rwWX', name='GWS CRIPSRa analysis', type='notebook', updated_at=2023-08-28 13:54:08, created_by_id='bKeW4T6E')

✅ saved: Run(id='Lr6JWp9k7WAEJZ0neBQa', run_at=2023-08-28 13:54:08, transform_id='0qgOkJBvG7rwWX', created_by_id='bKeW4T6E')

💡 adding file q4w1Lv1b9fAu4IaSxxHB as input for run Lr6JWp9k7WAEJZ0neBQa, adding parent transform lapgANZ3brHG4w

💡 file will be copied to default storage upon `save()` with key `None` ('.lamindb/pGVuvtCsZVuBGKRWmqhD.parquet')

💡 data is a dataframe, consider using .from_df() to link column names as features

✅ storing file 'pGVuvtCsZVuBGKRWmqhD' at '.lamindb/pGVuvtCsZVuBGKRWmqhD.parquet'

Let’s see what the data lineage of this file looks like:

file = ln.File.filter(description="hits from schmidt22 crispra GWS").one()

file.view_lineage()

In the background, somebody integrated and analyzed the outputs of the app upload and the Cell Ranger pipeline:

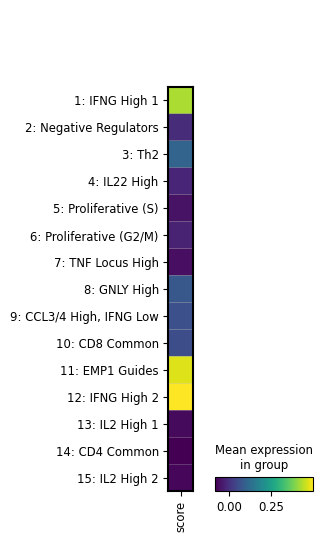

# Let us add analytics on top of the cell ranger pipeline and the phenotypic screening

transform = ln.Transform(

name="Perform single cell analysis, integrating with CRISPRa screen",

type="notebook",

)

ln.track(transform)

file_ps = ln.File.filter(description__icontains="perturbseq").one()

adata = file_ps.load()

screen_hits = file_hits.load()

import scanpy as sc

sc.tl.score_genes(adata, adata.var_names.intersection(screen_hits.index).tolist())

filesuffix = "_fig1_score-wgs-hits.png"

sc.pl.umap(adata, color="score", show=False, save=filesuffix)

filepath = f"figures/umap{filesuffix}"

file = ln.File(filepath, key=filepath)

file.save()

filesuffix = "fig2_score-wgs-hits-per-cluster.png"

sc.pl.matrixplot(

adata, groupby="cluster_name", var_names=["score"], show=False, save=filesuffix

)

filepath = f"figures/matrixplot_{filesuffix}"

file = ln.File(filepath, key=filepath)

file.save()

✅ saved: Transform(id='lduDnaLYJ3GJQ1', name='Perform single cell analysis, integrating with CRISPRa screen', type='notebook', updated_at=2023-08-28 13:54:08, created_by_id='bKeW4T6E')

✅ saved: Run(id='Zo7kda45OR5MhnixhyZp', run_at=2023-08-28 13:54:08, transform_id='lduDnaLYJ3GJQ1', created_by_id='bKeW4T6E')

💡 adding file s2nQ8TJv4m72cZjmpgzR as input for run Zo7kda45OR5MhnixhyZp, adding parent transform AAoRtj4w0X3egT

💡 adding file pGVuvtCsZVuBGKRWmqhD as input for run Zo7kda45OR5MhnixhyZp, adding parent transform 0qgOkJBvG7rwWX

WARNING: saving figure to file figures/umap_fig1_score-wgs-hits.png

💡 file will be copied to default storage upon `save()` with key 'figures/umap_fig1_score-wgs-hits.png'

✅ storing file 'tGtZ2JTQ6aZvcba0Uo37' at 'figures/umap_fig1_score-wgs-hits.png'

WARNING: saving figure to file figures/matrixplot_fig2_score-wgs-hits-per-cluster.png

💡 file will be copied to default storage upon `save()` with key 'figures/matrixplot_fig2_score-wgs-hits-per-cluster.png'

✅ storing file '2JUxm5pRwuudVUPSR1mp' at 'figures/matrixplot_fig2_score-wgs-hits-per-cluster.png'

The outcome is a few figures stored as image files. Further below, we’ll query one of them and look at its data lineage.

Track notebooks#

We’d now like to track the current Jupyter notebook to continue the work:

ln.track()

💡 notebook imports: ipython==8.14.0 lamindb==0.51.0 scanpy==1.9.4

✅ saved: Transform(id='1LCd8kco9lZUz8', name='Bird's eye view', short_name='birds-eye', version='0', type=notebook, updated_at=2023-08-28 13:54:10, created_by_id='bKeW4T6E')

✅ saved: Run(id='QSvArTZTnyV5hukpCn6D', run_at=2023-08-28 13:54:10, transform_id='1LCd8kco9lZUz8', created_by_id='bKeW4T6E')

Visualize data lineage#

Let’s load one of the plots:

file = ln.File.filter(key__contains="figures/matrixplot").one()

from IPython.display import Image, display

file.stage()

display(Image(filename=file.path))

💡 adding file 2JUxm5pRwuudVUPSR1mp as input for run QSvArTZTnyV5hukpCn6D, adding parent transform lduDnaLYJ3GJQ1

We see that the image file is tracked as an input of the current notebook. The input is highlighted, the notebook follows at the bottom:

file.view_lineage()

Alternatively, we can look only at the sequence of transforms and ignore the files:

transform = ln.Transform.search("Bird's eye view", return_queryset=True).first()

transform.parents.df()

| id | name | short_name | version | initial_version_id | type | reference | updated_at | created_by_id |
|---|---|---|---|---|---|---|---|---|
| lduDnaLYJ3GJQ1 | Perform single cell analysis, integrating with... | None | None | None | notebook | None | 2023-08-28 13:54:10 | bKeW4T6E |

transform.view_parents()
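To make the idea of following transforms upstream concrete, here is a minimal sketch in plain Python. It does not use lamindb: the `PARENTS` dictionary is a hand-written stand-in whose links are copied from the "adding parent transform" log lines in this walkthrough, and the `ancestors` helper is a hypothetical name introduced only for illustration.

```python
from collections import deque

# Parent links as reported by the "adding parent transform" log lines.
# Hand-written stand-in for illustration, not something returned by lamindb.
PARENTS = {
    "Chromium 10x upload": [],
    "Cell Ranger": ["Chromium 10x upload"],
    "Preprocess Cell Ranger outputs": ["Cell Ranger"],
    "Upload GWS CRISPRa result": [],
    "GWS CRIPSRa analysis": ["Upload GWS CRISPRa result"],
    "Perform single cell analysis, integrating with CRISPRa screen": [
        "Preprocess Cell Ranger outputs",
        "GWS CRIPSRa analysis",
    ],
    "Bird's eye view": [
        "Perform single cell analysis, integrating with CRISPRa screen"
    ],
}


def ancestors(name, parents=PARENTS):
    """Return all upstream transforms of `name`, nearest first (breadth-first)."""
    seen = []
    queue = deque(parents[name])
    while queue:
        current = queue.popleft()
        if current in seen:
            continue  # already visited via another branch
        seen.append(current)
        queue.extend(parents[current])
    return seen


for upstream in ancestors("Bird's eye view"):
    print(upstream)
```

The breadth-first order lists direct parents before more distant ancestors, which roughly mirrors reading a lineage graph from the current notebook back toward the instrument upload.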

Understand runs#

We tracked pipeline and notebook runs through run_context, which stores a Transform and a Run record as a global context.

File objects are the inputs and outputs of runs.

What if I don’t want a global context?

Sometimes, we don’t want to create a global run context but manually pass a run when creating a file:

run = ln.Run(transform=transform)

ln.File(filepath, run=run)

When does a file appear as a run input?

When accessing a file via stage(), load() or backed(), two things happen:

The current run gets added to file.input_of

The transform of that file gets added as a parent of the current transform

You can switch off auto-tracking of run inputs by setting ln.settings.track_run_inputs = False (see “Can I disable tracking run inputs?”).

You can also track run inputs on a case by case basis via is_run_input=True, e.g., here:

file.load(is_run_input=True)
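The bookkeeping described in this section can be sketched with a toy model in plain Python. This is not lamindb's implementation: `ToyTransform`, `ToyRun`, `ToyFile`, and `ToyContext` are hypothetical classes, and the rule that an explicit `is_run_input` overrides the global flag is an assumption made for the sketch.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(eq=False)  # compare by identity, like distinct database records
class ToyTransform:
    name: str
    parents: List["ToyTransform"] = field(default_factory=list)


@dataclass(eq=False)
class ToyRun:
    transform: ToyTransform


@dataclass(eq=False)
class ToyFile:
    key: str
    transform: ToyTransform  # the transform that created this file
    input_of: List[ToyRun] = field(default_factory=list)


class ToyContext:
    """Hypothetical stand-in for a global run context plus a settings flag."""

    def __init__(self, run: ToyRun, track_run_inputs: bool = True):
        self.run = run
        self.track_run_inputs = track_run_inputs

    def access(self, file: ToyFile, is_run_input: Optional[bool] = None) -> ToyFile:
        """Mimic stage()/load()/backed(): optionally record `file` as a run input."""
        # Assumption: an explicit is_run_input wins over the global flag.
        track = self.track_run_inputs if is_run_input is None else is_run_input
        if track:
            if self.run not in file.input_of:
                file.input_of.append(self.run)  # 1. run added to file.input_of
            current = self.run.transform
            if file.transform not in current.parents:
                current.parents.append(file.transform)  # 2. parent transform added
        return file


upload = ToyTransform("Upload GWS CRISPRa result")
analysis = ToyTransform("GWS CRIPSRa analysis")
raw = ToyFile("schmidt22-crispra-gws-IFNG.csv", transform=upload)

ctx = ToyContext(ToyRun(analysis), track_run_inputs=False)
ctx.access(raw)                     # global flag is off: nothing is recorded
ctx.access(raw, is_run_input=True)  # tracked for this one access only
print([run.transform.name for run in raw.input_of])
print([t.name for t in analysis.parents])
```

Keeping both updates in one place shows why a single file access is enough to connect two transforms in the lineage graph.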

Query by provenance#

We can query or search for the notebook that created the file:

transform = ln.Transform.search("GWS CRIPSRa analysis", return_queryset=True).first()

And then find all the files created by that notebook:

ln.File.filter(transform=transform).df()

| id | storage_id | key | suffix | accessor | description | version | initial_version_id | size | hash | hash_type | transform_id | run_id | updated_at | created_by_id |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| pGVuvtCsZVuBGKRWmqhD | yTQlccVf | None | .parquet | DataFrame | hits from schmidt22 crispra GWS | None | None | 18368 | O2Owo0_QlM9JBS2zAZD4Lw | md5 | 0qgOkJBvG7rwWX | Lr6JWp9k7WAEJZ0neBQa | 2023-08-28 13:54:08 | bKeW4T6E |

Which transform ingested a given file?

file = ln.File.filter().first()

file.transform

Transform(id='2Gwjq1pTD7eMBP', name='Chromium 10x upload', type='pipeline', updated_at=2023-08-28 13:54:05, created_by_id='DzTjkKse')

And which user?

file.created_by

User(id='DzTjkKse', handle='testuser1', email='testuser1@lamin.ai', name='Test User1', updated_at=2023-08-28 13:54:07)

Which transforms were created by a given user?

users = ln.User.lookup()

ln.Transform.filter(created_by=users.testuser2).df()

| id | name | short_name | version | initial_version_id | type | reference | updated_at | created_by_id |
|---|---|---|---|---|---|---|---|---|
| gWd973slbGqaIt | Cell Ranger | None | 7.2.0 | None | pipeline | None | 2023-08-28 13:54:06 | bKeW4T6E |
| AAoRtj4w0X3egT | Preprocess Cell Ranger outputs | None | 2.0 | None | pipeline | None | 2023-08-28 13:54:06 | bKeW4T6E |
| 0qgOkJBvG7rwWX | GWS CRIPSRa analysis | None | None | None | notebook | None | 2023-08-28 13:54:08 | bKeW4T6E |
| lduDnaLYJ3GJQ1 | Perform single cell analysis, integrating with... | None | None | None | notebook | None | 2023-08-28 13:54:10 | bKeW4T6E |
| 1LCd8kco9lZUz8 | Bird's eye view | birds-eye | 0 | None | notebook | None | 2023-08-28 13:54:10 | bKeW4T6E |

Which notebooks were created by a given user?

ln.Transform.filter(created_by=users.testuser2, type="notebook").df()

| id | name | short_name | version | initial_version_id | type | reference | updated_at | created_by_id |
|---|---|---|---|---|---|---|---|---|
| 0qgOkJBvG7rwWX | GWS CRIPSRa analysis | None | None | None | notebook | None | 2023-08-28 13:54:08 | bKeW4T6E |
| lduDnaLYJ3GJQ1 | Perform single cell analysis, integrating with... | None | None | None | notebook | None | 2023-08-28 13:54:10 | bKeW4T6E |
| 1LCd8kco9lZUz8 | Bird's eye view | birds-eye | 0 | None | notebook | None | 2023-08-28 13:54:10 | bKeW4T6E |
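Conceptually, the provenance queries above combine equality conditions on record fields. The sketch below imitates that with plain dictionaries; it does not call lamindb, the rows are copied by hand from the Transform records in this walkthrough, and `filter_records` is a hypothetical helper introduced for illustration.

```python
# A few Transform records from this walkthrough, as plain dictionaries.
TRANSFORMS = [
    {"id": "2Gwjq1pTD7eMBP", "name": "Chromium 10x upload",
     "type": "pipeline", "created_by_id": "DzTjkKse"},
    {"id": "gWd973slbGqaIt", "name": "Cell Ranger",
     "type": "pipeline", "created_by_id": "bKeW4T6E"},
    {"id": "0qgOkJBvG7rwWX", "name": "GWS CRIPSRa analysis",
     "type": "notebook", "created_by_id": "bKeW4T6E"},
    {"id": "1LCd8kco9lZUz8", "name": "Bird's eye view",
     "type": "notebook", "created_by_id": "bKeW4T6E"},
]


def filter_records(records, **conditions):
    """Keep the records whose fields equal every keyword condition."""
    return [
        record
        for record in records
        if all(record.get(key) == value for key, value in conditions.items())
    ]


notebooks_by_testuser2 = filter_records(
    TRANSFORMS, created_by_id="bKeW4T6E", type="notebook"
)
print([record["name"] for record in notebooks_by_testuser2])
```

Adding a condition can only narrow the result, which is why filtering by user and then by type returns a subset of the per-user listing.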

We can also view all recent additions to the entire database:

ln.view()

File

| id | storage_id | key | suffix | accessor | description | version | initial_version_id | size | hash | hash_type | transform_id | run_id | updated_at | created_by_id |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 2JUxm5pRwuudVUPSR1mp | yTQlccVf | figures/matrixplot_fig2_score-wgs-hits-per-clu... | .png | None | None | None | None | 28814 | JYIPcat0YWYVCX3RVd3mww | md5 | lduDnaLYJ3GJQ1 | Zo7kda45OR5MhnixhyZp | 2023-08-28 13:54:10 | bKeW4T6E |
| tGtZ2JTQ6aZvcba0Uo37 | yTQlccVf | figures/umap_fig1_score-wgs-hits.png | .png | None | None | None | None | 118999 | laQjVk4gh70YFzaUyzbUNg | md5 | lduDnaLYJ3GJQ1 | Zo7kda45OR5MhnixhyZp | 2023-08-28 13:54:09 | bKeW4T6E |
| pGVuvtCsZVuBGKRWmqhD | yTQlccVf | None | .parquet | DataFrame | hits from schmidt22 crispra GWS | None | None | 18368 | O2Owo0_QlM9JBS2zAZD4Lw | md5 | 0qgOkJBvG7rwWX | Lr6JWp9k7WAEJZ0neBQa | 2023-08-28 13:54:08 | bKeW4T6E |
| q4w1Lv1b9fAu4IaSxxHB | yTQlccVf | schmidt22-crispra-gws-IFNG.csv | .csv | None | Raw data of schmidt22 crispra GWS | None | None | 1729685 | cUSH0oQ2w-WccO8_ViKRAQ | md5 | lapgANZ3brHG4w | f0dBYuVnm9xJIrHixYBX | 2023-08-28 13:54:07 | DzTjkKse |
| s2nQ8TJv4m72cZjmpgzR | yTQlccVf | schmidt22_perturbseq.h5ad | .h5ad | AnnData | perturbseq counts | None | None | 20659936 | la7EvqEUMDlug9-rpw-udA | md5 | AAoRtj4w0X3egT | AQtkG4OkUIrLPYqR4Qn5 | 2023-08-28 13:54:06 | bKeW4T6E |
| NqQ90aex3CQvdYDoPmsn | yTQlccVf | perturbseq/filtered_feature_bc_matrix/features... | .tsv.gz | None | None | None | None | 6 | pH0SHVJ0gPf50hxbBAKrcw | md5 | gWd973slbGqaIt | WnefvAB77JsFt9MPhySh | 2023-08-28 13:54:06 | bKeW4T6E |
| jgWqeGfFwVAamkN82yfG | yTQlccVf | perturbseq/filtered_feature_bc_matrix/matrix.m... | .mtx.gz | None | None | None | None | 6 | kxY-SEHvkRUQfytaIFUd8A | md5 | gWd973slbGqaIt | WnefvAB77JsFt9MPhySh | 2023-08-28 13:54:06 | bKeW4T6E |

Run

| id | transform_id | run_at | created_by_id | reference | reference_type |
|---|---|---|---|---|---|
| 2JNx4mvl5KxkA1uo4bgb | 2Gwjq1pTD7eMBP | 2023-08-28 13:54:05 | DzTjkKse | None | None |
| WnefvAB77JsFt9MPhySh | gWd973slbGqaIt | 2023-08-28 13:54:06 | bKeW4T6E | None | None |
| AQtkG4OkUIrLPYqR4Qn5 | AAoRtj4w0X3egT | 2023-08-28 13:54:06 | bKeW4T6E | None | None |
| f0dBYuVnm9xJIrHixYBX | lapgANZ3brHG4w | 2023-08-28 13:54:07 | DzTjkKse | None | None |
| Lr6JWp9k7WAEJZ0neBQa | 0qgOkJBvG7rwWX | 2023-08-28 13:54:08 | bKeW4T6E | None | None |
| Zo7kda45OR5MhnixhyZp | lduDnaLYJ3GJQ1 | 2023-08-28 13:54:08 | bKeW4T6E | None | None |
| QSvArTZTnyV5hukpCn6D | 1LCd8kco9lZUz8 | 2023-08-28 13:54:10 | bKeW4T6E | None | None |

Storage

| id | root | type | region | updated_at | created_by_id |
|---|---|---|---|---|---|
| yTQlccVf | /home/runner/work/lamin-usecases/lamin-usecase... | local | None | 2023-08-28 13:54:04 | DzTjkKse |

Transform

| id | name | short_name | version | initial_version_id | type | reference | updated_at | created_by_id |
|---|---|---|---|---|---|---|---|---|
| 1LCd8kco9lZUz8 | Bird's eye view | birds-eye | 0 | None | notebook | None | 2023-08-28 13:54:10 | bKeW4T6E |
| lduDnaLYJ3GJQ1 | Perform single cell analysis, integrating with... | None | None | None | notebook | None | 2023-08-28 13:54:10 | bKeW4T6E |
| 0qgOkJBvG7rwWX | GWS CRIPSRa analysis | None | None | None | notebook | None | 2023-08-28 13:54:08 | bKeW4T6E |
| lapgANZ3brHG4w | Upload GWS CRISPRa result | None | None | None | app | None | 2023-08-28 13:54:07 | DzTjkKse |
| AAoRtj4w0X3egT | Preprocess Cell Ranger outputs | None | 2.0 | None | pipeline | None | 2023-08-28 13:54:06 | bKeW4T6E |
| gWd973slbGqaIt | Cell Ranger | None | 7.2.0 | None | pipeline | None | 2023-08-28 13:54:06 | bKeW4T6E |
| 2Gwjq1pTD7eMBP | Chromium 10x upload | None | None | None | pipeline | None | 2023-08-28 13:54:05 | DzTjkKse |

User

| id | handle | email | name | updated_at |
|---|---|---|---|---|
| bKeW4T6E | testuser2 | testuser2@lamin.ai | Test User2 | 2023-08-28 13:54:08 |
| DzTjkKse | testuser1 | testuser1@lamin.ai | Test User1 | 2023-08-28 13:54:07 |

!lamin login testuser1

!lamin delete --force mydata

!rm -r ./mydata

✅ logged in with email testuser1@lamin.ai and id DzTjkKse

💡 deleting instance testuser1/mydata

✅ deleted instance settings file: /home/runner/.lamin/instance--testuser1--mydata.env

✅ instance cache deleted

✅ deleted '.lndb' sqlite file

❗ consider manually deleting your stored data: /home/runner/work/lamin-usecases/lamin-usecases/docs/mydata